Sensory and Sensorimotor Systems

The Department for Sensory and Sensorimotor Systems, also known as the Natural Intelligence Lab, has been based at the MPI for Biological Cybernetics since October 2018. It is headed by Prof. Dr. Zhaoping Li. Our research in neuroscience aims to discover and understand how the brain receives and encodes sensory input (vision, audition, tactile sensation, and olfaction) and how it processes this information to direct body movements and to make cognitive decisions. The research is highly interdisciplinary and combines theoretical and experimental approaches, including human psychophysics, animal behavior, fMRI, electrophysiology, and computational modelling.

We seek to answer questions about attention, saliency, object recognition, and visual illusions, among other topics. A current focus of the department is a new framework for understanding vision. In this framework:

- Vision has three stages: encoding, selection, and decoding. Selection means that only a small fraction of visual inputs is selected by visual attention for further processing (decoding or recognition).

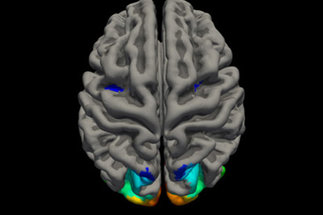

- V1 Saliency Hypothesis (V1SH): in primates, selection starts at the primary visual cortex, V1, which creates a bottom-up saliency map to guide attention or gaze positions exogenously.

- Starting from the output of V1, a progressively smaller fraction of the visual input information is transmitted downstream for deeper processing.

- The Central-Peripheral Dichotomy: feedback from higher visual areas to lower ones such as V1, which aids visual recognition, is directed mainly at the central visual field, which typically lies within the attentional spotlight.
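
As a rough illustration of the encoding, selection, and decoding stages listed above, the toy Python sketch below treats a small grid of oriented bars as the visual input. It is not the department's model. The function names, the 3x3 neighbourhood, and the rule that a response weakens as more neighbours share the same orientation are all simplifying assumptions chosen only to show how a bottom-up saliency map could pick out one location for further processing.

```python
# Toy sketch of encoding -> selection -> decoding.
# All names and rules here are illustrative assumptions, not the lab's model.

def v1_responses(grid):
    """Encoding (assumed rule): a location responds more strongly when fewer
    of its immediate neighbours share its orientation."""
    rows, cols = len(grid), len(grid[0])
    out = [[0.0] * cols for _ in range(rows)]
    for r in range(rows):
        for c in range(cols):
            neighbours = [
                grid[rr][cc]
                for rr in range(max(0, r - 1), min(rows, r + 2))
                for cc in range(max(0, c - 1), min(cols, c + 2))
                if (rr, cc) != (r, c)
            ]
            if neighbours:
                same = sum(1 for n in neighbours if n == grid[r][c])
                out[r][c] = 1.0 - same / len(neighbours)
    return out


def select(saliency):
    """Selection: return the (row, col) of the most salient location."""
    locations = [
        (r, c) for r, row in enumerate(saliency) for c in range(len(row))
    ]
    return max(locations, key=lambda rc: saliency[rc[0]][rc[1]])


def decode(grid, location):
    """Decoding: only the selected location is passed on for recognition."""
    r, c = location
    return grid[r][c]


# A 5x5 field of 0-degree bars with a single 90-degree bar.
grid = [[0] * 5 for _ in range(5)]
grid[2][3] = 90

attended = select(v1_responses(grid))
recognised = decode(grid, attended)
```

In this sketch the uniquely oriented bar receives the largest response, so it is the location that `select` returns and the only item that `decode` inspects; everything else in the grid is discarded after the selection stage.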